Meta Content Regulation: Navigating the Complexities of Online Speech

Introduction

In the digital age, social media platforms have transformed how we communicate, share information, and engage with the world. Among the companies that run them, Meta (formerly Facebook, Inc.), which operates Facebook, Instagram, and WhatsApp, is a global giant connecting billions of users across diverse cultures and backgrounds. That reach, however, also creates significant challenges, particularly in content regulation.

Regulating content on Meta's platforms is a multifaceted and often contentious issue, involving concerns about freedom of expression, hate speech, misinformation, and the potential for real-world harm. As a private company, Meta sets its own terms of service and Community Standards, which define the types of content that are prohibited or restricted on its platforms. How these standards are applied and enforced, however, remains the subject of ongoing debate and scrutiny.

The Balancing Act: Freedom of Expression vs. Content Moderation

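At the heart of the debate over Meta's content regulation lies the balance between freedom of expression and the need to protect users from harmful content. Freedom of expression is a fundamental human right, protected under Article 19 of both the Universal Declaration of Human Rights and the International Covenant on Civil and Political Rights (ICCPR), and widely recognized as essential to a democratic society. The right is not absolute, however. The ICCPR permits restrictions that are provided by law and necessary to protect the rights of others, national security, public order, or public health, and it requires states to prohibit advocacy of hatred that constitutes incitement to discrimination, hostility, or violence. Limits on speech that incites violence, promotes discrimination, or endangers public safety are generally justified on these grounds.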

Meta must strike this balance on a global scale, where cultural norms and legal frameworks vary widely. Speech that is acceptable in one country may be offensive or illegal in another: displaying Nazi symbols, for example, is generally a criminal offense in Germany but is generally protected by the First Amendment in the United States. This calls for a nuanced approach to content regulation that accounts for local context and legal requirements.
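One way a global platform can reconcile a single rulebook with divergent national laws is to layer the rules: a global policy applies everywhere, and content that breaks only a local law is restricted in that jurisdiction alone. Meta's Transparency Center describes restricting some content in the country where it is alleged to be illegal, but the sketch below is not Meta's implementation. The `Post` type, the category labels, and the country rules are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical categories banned everywhere under the platform's own global policy.
GLOBAL_PROHIBITED = {"credible_threat", "spam"}

# Hypothetical per-country rules: categories restricted only where local law requires it.
LOCAL_RESTRICTIONS = {
    "DE": {"unconstitutional_symbol"},
}


@dataclass
class Post:
    post_id: str
    categories: set[str] = field(default_factory=set)


def is_visible(post: Post, viewer_country: str) -> bool:
    """Return True if the post may be shown to a viewer in viewer_country."""
    # Global policy first: a violation removes the post for everyone.
    if post.categories & GLOBAL_PROHIBITED:
        return False
    # Local law second: restrict only in the country whose law the post breaks.
    local = LOCAL_RESTRICTIONS.get(viewer_country, set())
    return not (post.categories & local)


post = Post("p1", {"unconstitutional_symbol"})
print(is_visible(post, "DE"))  # False: restricted under local law
print(is_visible(post, "US"))  # True: no local rule applies
```

Keeping the two layers separate means a local restriction never silently becomes a worldwide removal.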

The Challenges of Content Moderation at Scale

Given the volume of content posted to Meta's platforms every day, moderation at scale is a formidable challenge. Meta combines automated systems with human reviewers to identify and remove content that violates its policies. Neither is foolproof, and errors run in both directions: over-removal of legitimate content and under-removal of harmful content.
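The trade-off between over-removal and under-removal can be made concrete. Suppose an automated classifier gives each post a violation score between 0 and 1 and the platform removes everything at or above a threshold. Raising the threshold removes fewer legitimate posts (false positives) but misses more harmful ones (false negatives). The scores and labels below are invented for illustration and are not Meta data.

```python
# Invented (score, is_actually_violating) pairs for a small labeled sample.
SAMPLE = [
    (0.95, True), (0.85, True), (0.70, False), (0.65, True),
    (0.40, False), (0.35, True), (0.20, False), (0.05, False),
]


def error_counts(threshold: float) -> tuple[int, int]:
    """Count (over_removals, under_removals) when removing posts scoring >= threshold."""
    # Over-removal: a legitimate post scored high enough to be removed (false positive).
    over = sum(1 for score, bad in SAMPLE if score >= threshold and not bad)
    # Under-removal: a violating post scored too low to be removed (false negative).
    under = sum(1 for score, bad in SAMPLE if score < threshold and bad)
    return over, under


for t in (0.3, 0.5, 0.8):
    over, under = error_counts(t)
    print(f"threshold={t}: over-removed={over}, under-removed={under}")
```

In this sample, a threshold of 0.3 over-removes two legitimate posts and misses none, while 0.8 over-removes none and misses two; no threshold eliminates both kinds of error.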

Automated systems, such as machine-learning classifiers, can be effective at detecting certain types of content, such as spam or hate speech that relies on explicit slurs. However, they often struggle with nuanced language, sarcasm, and cultural context; a post that quotes a slur in order to condemn it, for instance, can be misclassified as hate speech. Human reviewers can assess context more reliably, but they can examine only a small fraction of the content that is posted.
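A common way to combine the two is to let automation act only where it is confident and send the uncertain middle band to people. The sketch below assumes a hypothetical classifier score per post, two illustrative thresholds, and a fixed reviewer capacity; posts beyond that capacity wait in a backlog, which is the volume bottleneck the paragraph above describes. It is a generic triage pattern, not a description of Meta's actual pipeline.

```python
from collections import deque

AUTO_REMOVE_AT = 0.9   # hypothetical: act automatically only when very confident
HUMAN_REVIEW_AT = 0.5  # hypothetical: below this, leave the post up


def triage(scored_posts, reviewer_capacity):
    """Split (post_id, score) pairs into auto-removed, human-reviewed, backlog, and kept."""
    removed, kept = [], []
    review_queue = deque()
    for post_id, score in scored_posts:
        if score >= AUTO_REMOVE_AT:
            removed.append(post_id)        # clear-cut case handled by automation
        elif score >= HUMAN_REVIEW_AT:
            review_queue.append(post_id)   # uncertain band: send to a human reviewer
        else:
            kept.append(post_id)
    # Reviewers get through at most `reviewer_capacity` items; the rest stay queued.
    reviewed = [review_queue.popleft()
                for _ in range(min(reviewer_capacity, len(review_queue)))]
    return removed, reviewed, list(review_queue), kept


posts = [("a", 0.97), ("b", 0.72), ("c", 0.55), ("d", 0.61), ("e", 0.10)]
removed, reviewed, backlog, kept = triage(posts, reviewer_capacity=2)
print(removed, reviewed, backlog, kept)  # ['a'] ['b', 'c'] ['d'] ['e']
```

Widening the human-review band improves accuracy on ambiguous posts but grows the backlog unless reviewer capacity grows with it.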

The Role of Government Regulation

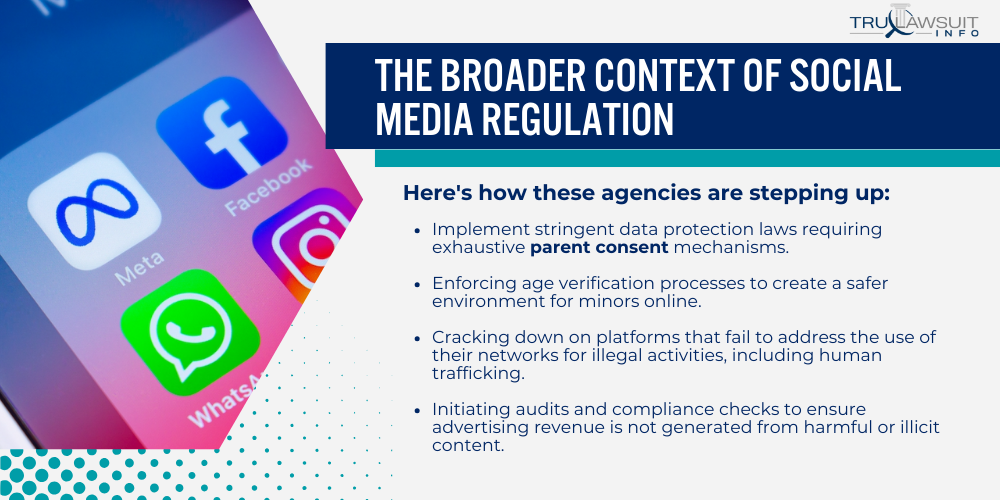

In recent years, governments have faced growing pressure to regulate social media platforms like Meta to address harmful content and misinformation. Some countries have enacted laws that require platforms to remove illegal content within a set timeframe or face penalties; Germany's Network Enforcement Act (NetzDG), for example, requires large platforms to remove "manifestly unlawful" content within 24 hours of receiving a complaint. Others have proposed regulations that would hold platforms liable for content posted by their users.
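Time-bound removal rules reduce to deadline arithmetic on the time a complaint is received. The sketch assumes a hypothetical rule with a 24-hour window for manifestly unlawful content and a seven-day window otherwise, similar in shape to Germany's NetzDG but not a statement of what that or any other law requires. The category names and functions are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical removal windows, measured from when the complaint is received.
WINDOWS = {
    "manifestly_unlawful": timedelta(hours=24),
    "unlawful": timedelta(days=7),
}


def removal_deadline(complaint_received: datetime, category: str) -> datetime:
    """Latest time by which content in `category` must be removed."""
    return complaint_received + WINDOWS[category]


def is_overdue(complaint_received: datetime, category: str, now: datetime) -> bool:
    """True if `now` is past the removal deadline for this complaint."""
    return now > removal_deadline(complaint_received, category)


# Timezone-aware timestamps avoid ambiguity across jurisdictions.
received = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
now = datetime(2024, 3, 2, 12, 0, tzinfo=timezone.utc)
print(removal_deadline(received, "manifestly_unlawful"))  # 2024-03-02 09:00:00+00:00
print(is_overdue(received, "manifestly_unlawful", now))   # True
print(is_overdue(received, "unlawful", now))              # False
```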

The debate over government regulation of social media platforms is complex, with proponents arguing that it is necessary to protect users from harm and ensure accountability, while opponents argue that it could stifle freedom of expression and lead to censorship. Striking the right balance between government oversight and platform autonomy is crucial to avoid unintended consequences.

The Impact of Meta Content Regulation on Society

Meta's content regulation has a profound impact on society, shaping online discourse and influencing public opinion. What Meta allows or prohibits on its platforms can affect how people perceive the world, what information they can access, and which opinions they form.

The removal of certain types of content, such as hate speech or misinformation, can help to create a more inclusive and informed online environment. However, it can also lead to accusations of censorship and bias, particularly if the decisions about what content to remove are perceived as arbitrary or politically motivated.

The Future of Meta Content Regulation

The future of Meta content regulation is likely to be shaped by several factors, including technological advancements, evolving social norms, and regulatory pressures. As AI technology continues to develop, it may become more effective at detecting and removing harmful content. However, it is also important to address the potential biases and limitations of AI algorithms.
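One concrete way to check an algorithm for bias is to compare its error rates across groups of users, for example how often it wrongly flags benign posts written in different dialects. The sketch below computes a per-group false-positive rate from a labeled audit sample. The group names and records are invented for illustration.

```python
from collections import defaultdict

# Invented audit records: (group, flagged_by_model, actually_violating).
AUDIT = [
    ("dialect_a", True, False), ("dialect_a", False, False),
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", True, True),
]


def false_positive_rates(records):
    """Share of non-violating posts that the model flagged, per group."""
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, was_flagged, violating in records:
        if not violating:          # the false-positive rate counts only truly benign posts
            benign[group] += 1
            if was_flagged:
                flagged[group] += 1
    return {g: flagged[g] / benign[g] for g in benign}


print(false_positive_rates(AUDIT))
```

In this invented sample, benign posts in `dialect_b` are flagged at a rate of 2/3 versus 1/4 for `dialect_a`, the kind of disparity such an audit is meant to surface; a real audit would need much larger, carefully labeled samples.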

Evolving social norms will also play a role in shaping content regulation policies. As society becomes more aware of the harms caused by online hate speech, misinformation, and other forms of harmful content, there may be greater pressure on platforms to take stronger action.

Regulatory pressures from governments around the world are also likely to increase in the coming years. As governments grapple with the challenges of regulating social media platforms, they may enact new laws and regulations that require platforms to take greater responsibility for the content posted by their users.

Key Considerations for Meta Content Regulation

As Meta continues to grapple with the challenges of content regulation, several key considerations should be taken into account:

- Transparency: Meta should be transparent about its content regulation policies and how they are enforced. This includes providing clear explanations of the types of content that are prohibited or restricted, as well as the processes for reporting and appealing content decisions.

- Accountability: Meta should be accountable for its content regulation decisions. This includes having mechanisms in place to address complaints and appeals, as well as being transparent about the data used to make content decisions.

- Consistency: Meta should strive for consistency in its content regulation policies and their enforcement. This means applying the same standards to all users and content, regardless of their political affiliation or viewpoint.

- Nuance: Meta should recognize the nuances of language, culture, and context when making content regulation decisions. This includes taking into account the intent behind the content, as well as the potential impact it could have on different communities.

- Collaboration: Meta should collaborate with experts, civil society organizations, and governments to develop effective content regulation policies. This includes seeking input from diverse perspectives and taking into account the potential impact of content regulation decisions on different communities.
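The transparency and accountability points above translate naturally into published enforcement statistics. Meta's periodic enforcement reports include figures such as content actioned, appealed, and restored; the sketch below shows how comparable per-policy figures, plus an appeal success rate, might be aggregated from individual decisions. The `Decision` record, the policy labels, and the sample data are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Decision:
    policy: str      # hypothetical policy label, e.g. "hate_speech" or "spam"
    appealed: bool   # did the user appeal the removal?
    restored: bool   # was the content put back?


def enforcement_summary(decisions):
    """Aggregate per-policy counts of actions, appeals, and restorations after appeal."""
    actioned, appealed, restored = Counter(), Counter(), Counter()
    for d in decisions:
        actioned[d.policy] += 1
        appealed[d.policy] += d.appealed                 # bools add as 0 or 1
        restored[d.policy] += d.appealed and d.restored  # count only successful appeals
    return {
        p: {
            "actioned": actioned[p],
            "appealed": appealed[p],
            "restored": restored[p],
            # Share of appeals that succeeded; None when nothing was appealed.
            "restore_rate": restored[p] / appealed[p] if appealed[p] else None,
        }
        for p in actioned
    }


decisions = [
    Decision("spam", False, False),
    Decision("spam", True, False),
    Decision("hate_speech", True, True),
    Decision("hate_speech", True, False),
    Decision("hate_speech", False, False),
]
summary = enforcement_summary(decisions)
print(summary["hate_speech"])
```

A persistently high restore rate for one policy would suggest that first-pass enforcement there removes too much, which is the kind of signal the accountability and consistency points call for making visible.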

Conclusion

Meta content regulation is a complex and multifaceted issue that requires careful consideration of freedom of expression, content moderation, government regulation, and societal impact. As Meta continues to evolve and adapt to the changing digital landscape, it is crucial that it prioritizes transparency, accountability, consistency, nuance, and collaboration in its content regulation policies. By doing so, Meta can help to create a more inclusive, informed, and safe online environment for its billions of users around the world.